About the Gecko Cluster

From HPC


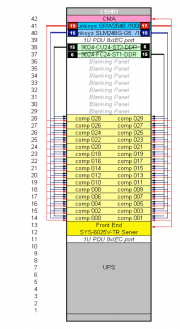

[[Image:Gecko-cluster-layout.png|left|thumb|Gecko cluster, rack layout]] | [[Image:Gecko-cluster-layout.png|left|thumb|Gecko cluster, rack layout]] | ||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | |||

| - | + | [[Image:Cluster-back.jpg|left|thumb|Gecko cluster, picture from the back of the rack]] | |

[[Category:About]] | [[Category:About]] | ||

Current revision as of 19:22, 20 June 2019

Overview

The cluster was delivered to the School at the end of summer 2008. It was purchased from StreamLine Computing with SRIF funding to provide a central HPC resource for research at the School. The service is hosted by IT Services in the Keppel Street building. It has since been upgraded with some newer hardware and various OS updates.

The cluster runs Scientific Linux/CentOS 7, and the job scheduler is Open Grid Scheduler (previously Sun Grid Engine).

A quick overview:

- Scientific Linux 7

- 18 nodes

  - Each node has between 32 GB and 256 GB of RAM and 8 to 32 CPU cores (a mixture of Intel and AMD CPUs)

- Each user has 200 GB of storage by default

- OpenMP for single-node, multi-core/multi-processor parallel computation, and OpenMPI (gcc) for parallel computation across the cluster

Please see the Available Software page for a more complete list of the software on the cluster.