About the Gecko Cluster

From HPC


Revision as of 10:35, 5 November 2008


Overview

The cluster was delivered to the School at the end of summer 2008. It was purchased from StreamLine Computing with SRIF funding to provide a central HPC resource for research at the School. The service is hosted by IT Services in the Keppel Street building.

As delivered in 2008, the cluster consists of 30 compute nodes, 1 head node, and an InfiniBand and Gigabit Ethernet backbone. The cluster runs SUSE Linux 10.1 (hence the name Gecko), and the job scheduler is Sun Grid Engine 6.1.

A quick overview:

- SUSE Linux 10.1 (64-bit) operating system

- 30 compute nodes, each with 2x 2.5GHz quad-core Intel Xeons and 8GB of memory (i.e. 8 cores per node, enough for 8 jobs with 1GB of memory each)

- 3.7TB of user storage (shared across the cluster)

- InfiniBand network for parallel computation

- OpenMP for multi-core/multi-processor parallel computation within a single node, and Open MPI (built with the Intel and GCC compilers) for parallel computation across the cluster over the InfiniBand network

- Sun Grid Engine 6.1 for job submission and queue management

- Intel C++/Fortran and GNU compilers, Java 1.4, 1.5 and 1.6, and R

- Stata 10 SE

Please see the Available Software page for a more complete list of the software on the cluster.
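The per-node figures in the overview translate into a few simple capacity numbers. The Python sketch below works them out, assuming one job slot per core and using the nominal 8GB per node quoted above. `nodes_needed` is a hypothetical helper for illustration only, not part of Sun Grid Engine or any installed tool; the real slot and memory limits are whatever the scheduler is configured to enforce.

```python
import math

# Nominal figures from the overview list (assumption: the scheduler
# offers one slot per core; actual limits are set in Sun Grid Engine).
NODES = 30
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 4
MEMORY_PER_NODE_GB = 8

cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET      # cores in one node
total_cores = NODES * cores_per_node                      # cores in the whole cluster
memory_per_core_gb = MEMORY_PER_NODE_GB / cores_per_node  # memory share per core


def nodes_needed(processes: int, memory_per_process_gb: float = 1.0) -> int:
    """Hypothetical helper: minimum nodes for a job, one process per core."""
    if processes < 1:
        raise ValueError("processes must be at least 1")
    if not 0 < memory_per_process_gb <= MEMORY_PER_NODE_GB:
        raise ValueError("memory per process must be between 0 and one node's memory")
    # Processes per node are capped by whichever runs out first: cores or memory.
    per_node = min(cores_per_node, int(MEMORY_PER_NODE_GB // memory_per_process_gb))
    needed = math.ceil(processes / per_node)
    if needed > NODES:
        raise ValueError("job needs more nodes than the cluster has")
    return needed


print(cores_per_node, total_cores, memory_per_core_gb)  # 8 240 1.0
print(nodes_needed(20))       # 3
print(nodes_needed(8, 2.0))   # 2
```

For example, a 20-process Open MPI job at 1GB per process needs at least 3 nodes (8 + 8 + 4), while processes that each need 2GB are limited to 4 per node by memory rather than by cores.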

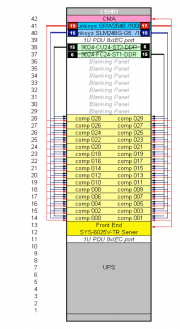

Hardware

QLogic SilverStorm 9024 InfiniBand

InfiniBand DDR (20Gb/s) switches connecting the compute nodes: http://www.qlogic.com/Products/HPC_products_infibandswitches9024.aspx
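The 20Gb/s figure is a signalling rate. Assuming the usual 4X link width, DDR InfiniBand uses 8b/10b line coding, so the usable data rate is 16Gb/s. The rough Python sketch below compares ideal transfer times over InfiniBand and the Gigabit Ethernet network, ignoring latency and protocol overhead; the constant and function names are illustrative, and real MPI throughput will be lower.

```python
# Nominal link rates (assumption: 4X DDR links); latency and protocol
# overhead are ignored, so these are best-case figures.
IB_DDR_4X_SIGNAL_GBPS = 20.0   # quoted DDR signalling rate
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b line coding used by SDR/DDR InfiniBand
GIGE_DATA_GBPS = 1.0           # Gigabit Ethernet data rate

ib_data_gbps = IB_DDR_4X_SIGNAL_GBPS * ENCODING_EFFICIENCY  # usable data rate


def transfer_seconds(size_gb: float, rate_gbps: float) -> float:
    """Ideal time to move size_gb gigabytes at rate_gbps gigabits per second."""
    if rate_gbps <= 0:
        raise ValueError("rate must be positive")
    return size_gb * 8 / rate_gbps  # 8 bits per byte


print(ib_data_gbps)                            # 16.0
print(transfer_seconds(1.0, ib_data_gbps))     # 0.5
print(transfer_seconds(1.0, GIGE_DATA_GBPS))   # 8.0
```

On these idealised numbers, moving 1GB between nodes takes about half a second over InfiniBand against about 8 seconds over Gigabit Ethernet, which is why MPI traffic is routed over InfiniBand.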

Supermicro SYS-6015TB-iNFV Twin Server - Compute Node

1U chassis containing two Supermicro X7DBT-iNF motherboards. Each motherboard is fitted with 2x Intel 5420 2.5GHz quad-core Xeons (1333MHz FSB), an Intel 5000P chipset, 8x 2GB DDR2 667MHz fully buffered memory, and a 160GB SATA2 7.2K rpm Western Digital HDD with 16MB cache.

Supermicro SYS-6025V-TR Server - Head/Master Node

2U chassis. X7DVL-E motherboard fitted with 1x Intel E5405 2GHz quad-core Xeon, 4x 2GB DDR2 667MHz fully buffered memory, 2x 250GB SATA2 HDDs (system), and 6x 1TB SATA2 7.2K rpm Western Digital HDDs with 16MB cache (user space).